Web 1.0

Web 1.0 is a retronym referring to the first stage of the World Wide Web's evolution, from roughly 1989 to 2004. According to Graham Cormode and Balachander Krishnamurthy, "content creators were few in Web 1.0 with the vast majority of users simply acting as consumers of content".[14] Personal web pages were common, consisting mainly of static pages hosted on ISP-run web servers or on free web hosting services such as Tripod and the now-defunct GeoCities.[15][16] With Web 2.0, it became common for average web users to have social-networking profiles (on sites such as Myspace and Facebook) and personal blogs (on sites such as Blogger, Tumblr and LiveJournal), hosted either through a low-cost web hosting service or on a dedicated host. In general, Web 2.0 content was generated dynamically, allowing readers to comment directly on pages in a way that was not common previously.[citation needed]

Some Web 2.0 capabilities were present in the days of Web 1.0 but were implemented differently. For example, a Web 1.0 site may have had a guestbook page for visitor comments, instead of a comment section at the end of each page (typical of Web 2.0). During Web 1.0, server performance and bandwidth had to be considered: lengthy comment threads on multiple pages could potentially slow down an entire site. Terry Flew, in the third edition of his book New Media, described the differences between Web 1.0 and Web 2.0 as a

"move from personal websites to blogs and blog site aggregation, from publishing to participation, from web content as the outcome of large up-front investment to an ongoing and interactive process, and from content management systems to links based on "tagging" website content using keywords (folksonomy)."

Flew believed these factors formed the trends that resulted in the onset of the Web 2.0 "craze".[17]

Characteristics

Some common design elements of a Web 1.0 site include:[18]

- Static pages rather than dynamic HTML.[19]

- Content provided from the server's filesystem rather than a relational database management system (RDBMS).

- Pages built using Server Side Includes or Common Gateway Interface (CGI) instead of a web application written in a dynamic programming language such as Perl, PHP, Python or Ruby.[clarification needed]

- The use of HTML 3.2-era elements such as frames and tables to position and align elements on a page. These were often used in combination with spacer GIFs.[citation needed]

- Proprietary HTML extensions, such as the <blink> and <marquee> tags, introduced during the first browser war.

- Online guestbooks.

- GIF buttons, graphics (typically 88×31 pixels in size) promoting web browsers, operating systems, text editors and various other products.

- HTML forms sent via email. Support for server-side scripting was rare on shared servers during this period. To provide a feedback mechanism for website visitors, mailto forms were used. A user would fill in a form, and upon clicking the form's submit button, their email client would launch and attempt to send an email containing the form's details. The popularity and complications of the mailto protocol led browser developers to incorporate email clients into their browsers.[20]
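A mailto form ultimately hands the browser a `mailto:` URI. The minimal Python sketch below, assuming the `subject` and `body` header fields defined for mailto URIs in RFC 6068, shows how a form's field values could be packed into such a URI. The address, subject and field names are hypothetical, and the "name=value" body layout is only an approximation of what browsers produced; real browsers differed in exactly how they serialized form data.

```python
from urllib.parse import quote


def build_mailto(address: str, subject: str, fields: dict[str, str]) -> str:
    """Pack form fields into a mailto: URI (RFC 6068 style).

    Field values go into the message body as "name=value" lines,
    roughly approximating a form submitted with enctype="text/plain".
    """
    body = "\r\n".join(f"{name}={value}" for name, value in fields.items())
    # Percent-encode subject and body, including spaces, "=" and line breaks.
    query = f"subject={quote(subject, safe='')}&body={quote(body, safe='')}"
    return f"mailto:{address}?{query}"


uri = build_mailto(
    "webmaster@example.com",            # hypothetical site owner address
    "Guestbook entry",
    {"name": "Ann", "comment": "Nice site!"},
)
print(uri)
```

Clicking submit on such a form simply opened the visitor's email client with this pre-filled message; nothing was processed on the server, which is why the technique suited hosts without server-side scripting.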

Web 2.0

The term "Web 2.0" was coined by Darcy DiNucci, an information architecture consultant, in her January 1999 article "Fragmented Future":[3][21]

"The Web we know now, which loads into a browser window in essentially static screenfuls, is only an embryo of the Web to come. The first glimmerings of Web 2.0 are beginning to appear, and we are just starting to see how that embryo might develop. The Web will be understood not as screenfuls of text and graphics but as a transport mechanism, the ether through which interactivity happens. It will [...] appear on your computer screen, [...] on your TV set [...] your car dashboard [...] your cell phone [...] hand-held game machines [...] maybe even your microwave oven."

Writing when Palm Inc. introduced its first web-capable personal digital assistant (supporting Web access with WAP), DiNucci saw the Web "fragmenting" into a future that extended beyond the browser/PC combination it was identified with. She focused on how the basic information structure and hyper-linking mechanism introduced by HTTP would be used by a variety of devices and platforms. As such, her "2.0" designation referred to a next version of the Web that is not directly related to the term's current use.

The term Web 2.0 did not resurface until 2002.[22][23][24] Companies such as Amazon, Facebook, Twitter, and Google made it easy to connect and engage in online transactions. Web 2.0 introduced new features, such as multimedia content and interactive web applications, which mainly consisted of two-dimensional screens.[25] Kinsley and Eric focus on the concepts currently associated with the term where, as Scott Dietzen puts it, "the Web becomes a universal, standards-based integration platform".[24] In 2004, the term began to gain popularity when O'Reilly Media and MediaLive hosted the first Web 2.0 conference. In their opening remarks, John Battelle and Tim O'Reilly outlined their definition of the "Web as Platform", where software applications are built upon the Web as opposed to upon the desktop. The unique aspect of this migration, they argued, is that "customers are building your business for you".[26] They argued that the activities of users generating content (in the form of ideas, text, videos, or pictures) could be "harnessed" to create value. O'Reilly and Battelle contrasted Web 2.0 with what they called "Web 1.0". They associated this term with the business models of Netscape and the Encyclopædia Britannica Online. For example,

"Netscape framed 'the web as platform' in terms of the old software paradigm: their flagship product was the web browser, a desktop application, and their strategy was to use their dominance in the browser market to establish a market for high-priced server products. Control over standards for displaying content and applications in the browser would, in theory, give Netscape the kind of market power enjoyed by Microsoft in the PC market. Much like the 'horseless carriage' framed the automobile as an extension of the familiar, Netscape promoted a 'webtop' to replace the desktop, and planned to populate that webtop with information updates and applets pushed to the webtop by information providers who would purchase Netscape servers.[27]"

In short, Netscape focused on creating software, releasing updates and bug fixes, and distributing it to the end users. O'Reilly contrasted this with Google, a company that did not, at the time, focus on producing end-user software, but instead on providing a service based on data, such as the links that Web page authors make between sites. Google exploits this user-generated content to offer Web searches based on reputation through its "PageRank" algorithm. Unlike software, which undergoes scheduled releases, such services are constantly updated, a process called "the perpetual beta". A similar difference can be seen between the Encyclopædia Britannica Online and Wikipedia – while the Britannica relies upon experts to write articles and release them periodically in publications, Wikipedia relies on trust in (sometimes anonymous) community members to constantly write and edit content. Wikipedia editors are not required to have educational credentials, such as degrees, in the subjects in which they are editing. Wikipedia is not based on subject-matter expertise, but rather on an adaptation of the open source software adage "given enough eyeballs, all bugs are shallow". This maxim states that if enough users are able to look at a software product's code (or a website), then these users will be able to fix any "bugs" or other problems. The Wikipedia volunteer editor community produces, edits, and updates articles constantly. Web 2.0 conferences have been held every year since 2004, attracting entrepreneurs, representatives from large companies, tech experts and technology reporters.
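The idea that links between pages act as votes of reputation can be illustrated with the classic PageRank power iteration. The Python below is a simplified sketch on a hypothetical four-page link graph, assuming the commonly cited damping factor of 0.85 and treating any page with no outgoing links as if it linked to every page; it is not Google's production ranking system.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 100) -> dict[str, float]:
    """Simplified PageRank by power iteration over a small link graph."""
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}          # start from a uniform guess
    for _ in range(iterations):
        # Every page first receives its share of the "random jump".
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, targets in links.items():
            if targets:
                # A page splits its current rank evenly among its links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling page: spread its rank evenly over all pages.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank


graph = {                                       # hypothetical link graph
    "home": ["blog", "wiki"],
    "blog": ["wiki"],
    "wiki": ["home"],
    "about": ["wiki"],
}
ranks = pagerank(graph)
for page, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

In this toy graph "wiki" ends up ranked highest because three pages link to it, while "about", which nothing links to, keeps only the baseline random-jump share. The ranking comes entirely from links that page authors created, which is the sense in which such a service is built on user-generated data.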

The popularity of Web 2.0 was acknowledged when TIME magazine named "You" its 2006 Person of the Year.[28] That is, TIME selected the masses of users who were participating in content creation on social networks, blogs, wikis, and media sharing sites.

In the cover story, Lev Grossman explains:

"It's a story about community and collaboration on a scale never seen before. It's about the cosmic compendium of knowledge Wikipedia and the million-channel people's network YouTube and the online metropolis MySpace. It's about the many wresting power from the few and helping one another for nothing and how that will not only change the world but also change the way the world changes."

Characteristics

Instead of merely reading a Web 2.0 site, a user is invited to contribute to the site's content by commenting on published articles, or by creating a user account or profile on the site, which may enable increased participation. By placing more emphasis on these already-extant capabilities, Web 2.0 sites encourage users to rely more on their browser for user interface, application software ("apps") and file storage facilities. This has been called "network as platform" computing.[5] Major features of Web 2.0 include social networking websites, self-publishing platforms (e.g., WordPress' easy-to-use blog and website creation tools), "tagging" (which enables users to label websites, videos or photos in some fashion), "like" buttons (which enable a user to indicate that they are pleased by online content), and social bookmarking.

Users can provide the data and exercise some control over what they share on a Web 2.0 site.[5][29] These sites may have an "architecture of participation" that encourages users to add value to the application as they use it.[4][5] Users can add value in many ways, such as uploading their own content on blogs, consumer-evaluation platforms (e.g. Amazon and eBay), news websites (e.g. responding in the comment section), social networking services, media-sharing websites (e.g. YouTube and Instagram) and collaborative-writing projects.[30] Some scholars argue that cloud computing is an example of Web 2.0 because it is simply an implication of computing on the Internet.[31]

Edit box interface through which anyone could edit a Wikipedia article

Web 2.0 offers almost all users the same freedom to contribute,[32] which can lead to effects that members of a given community perceive as more or less productive, and in turn to emotional distress and disagreement. The impossibility of excluding group members who do not contribute to the provision of goods (i.e., to the creation of a user-generated website) from sharing the benefits (of using the website) gives rise to the possibility that serious members will prefer to withhold their contribution of effort and "free ride" on the contributions of others.[33] This requires what is sometimes called radical trust by the management of the website.

Encyclopaedia Britannica calls Wikipedia "the epitome of the so-called Web 2.0" and describes what many view as the ideal of a Web 2.0 platform as "an egalitarian environment where the web of social software enmeshes users in both their real and virtual-reality workplaces."[34]

According to Best,[35] the characteristics of Web 2.0 are rich user experience, user participation, dynamic content, metadata, Web standards, and scalability. Further characteristics, such as openness, freedom,[36] and collective intelligence[37] by way of user participation, can also be viewed as essential attributes of Web 2.0. Some websites require users to contribute user-generated content to have access to the website, to discourage "free riding".

A list of ways that people can volunteer to improve Mass Effect Wiki on Wikia, an example of content generated by users working collaboratively

The key features of Web 2.0 include:[citation needed]

- Folksonomy – free classification of information; allows users to collectively classify and find information (e.g. "tagging" of websites, images, videos or links)

- Rich user experience – dynamic content that is responsive to user input (e.g., a user can "click" on an image to enlarge it or find out more information)

- User participation – information flows two ways between the site owner and site users by means of evaluation, review, and online commenting. Site users also typically create user-generated content for others to see (e.g., Wikipedia, an online encyclopedia that anyone can write articles for or edit)

- Software as a service (SaaS) – Web 2.0 sites developed APIs to allow automated usage, such as by a Web "app" (software application) or a mashup

- Mass participation – near-universal web access leads to differentiation of concerns, from the traditional Internet user base (who tended to be hackers and computer hobbyists) to a wider variety of users, drastically changing the audience of internet users.
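Folksonomy, the first feature listed above, can be modeled as a many-to-many mapping between freely chosen tags and items. The Python sketch below uses hypothetical users, URLs and tags, and assumes one simple design choice (each user's tag on a given item counts once); it shows how a site could aggregate tags from many users so that a tag's popularity emerges from collective use rather than from a predefined taxonomy.

```python
from collections import Counter, defaultdict


class TagIndex:
    """Minimal folksonomy store: any user may attach any tag to any item."""

    def __init__(self) -> None:
        self.items_by_tag: defaultdict[str, set[str]] = defaultdict(set)
        self.tag_counts: Counter[str] = Counter()
        self.seen: set[tuple[str, str, str]] = set()

    def tag(self, user: str, item: str, *tags: str) -> None:
        for raw in tags:
            tag = raw.strip().lower()          # normalize case and spacing
            if (user, item, tag) in self.seen:
                continue                       # one vote per user, item, tag
            self.seen.add((user, item, tag))
            self.items_by_tag[tag].add(item)
            self.tag_counts[tag] += 1          # popularity emerges from use

    def find(self, tag: str) -> set[str]:
        # .get() avoids creating empty entries in the defaultdict.
        return set(self.items_by_tag.get(tag.strip().lower(), set()))

    def popular(self, n: int = 3) -> list[tuple[str, int]]:
        return self.tag_counts.most_common(n)


index = TagIndex()
index.tag("alice", "https://example.com/cat.jpg", "cats", "Funny")
index.tag("bob", "https://example.com/cat.jpg", "cats")
index.tag("carol", "https://example.com/dog.mp4", "funny", "dogs")
print(sorted(index.find("funny")))
print(index.popular(2))
```

Nothing here fixes the vocabulary in advance: "Funny" and "funny" from different users merge after normalization, and the most-used tags surface simply because many people chose them.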

Technologies

The client-side (Web browser) technologies used in Web 2.0 development include Ajax and JavaScript frameworks. Ajax programming uses JavaScript and the Document Object Model (DOM) to update selected regions of the page area without undergoing a full page reload. To allow users to continue interacting with the page, communications such as data requests going to the server are separated from data coming back to the page (asynchronously).

Otherwise, the user would have to routinely wait for the data to come back before they can do anything else on that page, just as a user has to wait for a page to complete the reload. This also improves the overall performance of the site, since requests can be sent and completed independently of the blocking and queueing required to send data back to the client. The data fetched by an Ajax request is typically formatted in XML or JSON (JavaScript Object Notation), two widely used structured data formats. Since browsers can parse both formats natively, a programmer can easily use them to transmit structured data in their Web application.
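In a browser, this asynchronous pattern is written in JavaScript. As a language-neutral illustration only, the Python `asyncio` sketch below stands in for it: a simulated request (the hypothetical `fetch_comments`, which sleeps instead of doing real network I/O) runs in the background while simulated user clicks keep being handled, and its JSON payload is parsed once it arrives.

```python
import asyncio
import json

events: list[str] = []


async def fetch_comments() -> dict:
    """Hypothetical stand-in for an Ajax request; no real network I/O."""
    await asyncio.sleep(0.05)                  # simulated server latency
    payload = '{"comments": [{"user": "ann", "text": "First!"}]}'
    events.append("response arrived")
    return json.loads(payload)                 # JSON maps onto dicts/lists


async def handle_clicks(count: int) -> None:
    """Simulated user interaction that continues while the request is pending."""
    for i in range(count):
        events.append(f"click {i} handled")
        await asyncio.sleep(0)                 # yield so other tasks can run


async def main() -> dict:
    request = asyncio.create_task(fetch_comments())  # send the request...
    await handle_clicks(3)                           # ...and stay responsive
    return await request                             # use the data when ready


data = asyncio.run(main())
print(events)
print(data["comments"][0]["text"])
```

All three clicks are handled before the response arrives, which is the point of the asynchronous design: the pending request never blocks interaction. In a synchronous version, the clicks would have to wait until the data came back.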

When this data is received via Ajax, the JavaScript program then uses the Document Object Model to dynamically update the Web page based on the new data, allowing for a rapid and interactive user experience. In short, using these techniques, web designers can make their pages function like desktop applications. For example, Google Docs uses this technique to create a Web-based word processor.

As a widely available plug-in independent of W3C standards (the World Wide Web Consortium is the governing body of Web standards and protocols), Adobe Flash was capable of doing many things that were not possible before HTML5. Of Flash's many capabilities, the most commonly used was its ability to integrate streaming multimedia into HTML pages. With the introduction of HTML5 in 2010 and growing concerns about Flash's security, Flash became obsolete, with browser support ending on December 31, 2020.

In addition to Flash and Ajax, JavaScript/Ajax frameworks became a very popular means of creating Web 2.0 sites. At their core, these frameworks are built on the same technologies: JavaScript, Ajax, and the DOM. However, frameworks smooth over inconsistencies between Web browsers and extend the functionality available to developers. Many of them also come with customizable, prefabricated "widgets" that accomplish such common tasks as picking a date from a calendar, displaying a data chart, or making a tabbed panel.

On the server side, Web 2.0 uses many of the same technologies as Web 1.0. Languages such as Perl, PHP, Python, Ruby, as well as Enterprise Java (J2EE) and Microsoft .NET Framework, are used by developers to output data dynamically using information from files and databases. This allows websites and web services to share machine-readable formats such as XML (Atom, RSS, etc.) and JSON. When data is available in one of these formats, another website can use it to integrate a portion of that site's functionality.
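A minimal sketch, assuming a hypothetical list of blog posts and a placeholder site URL, of how a server-side program might publish the same data both as JSON and as an RSS 2.0 feed using only the Python standard library. A real site would load the posts from files or a database and include more of the optional elements that the RSS and Atom formats allow.

```python
import json
import xml.etree.ElementTree as ET

posts = [  # hypothetical data, e.g. loaded from a database
    {"title": "Hello, Web 2.0", "link": "https://example.com/posts/1"},
    {"title": "Tagging 101", "link": "https://example.com/posts/2"},
]


def to_json(items: list[dict]) -> str:
    """Serialize the posts as a JSON document."""
    return json.dumps({"posts": items}, indent=2)


def to_rss(items: list[dict]) -> str:
    """Serialize the same posts as a minimal RSS 2.0 feed."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    # title, link and description are the required channel elements.
    ET.SubElement(channel, "title").text = "Example blog"
    ET.SubElement(channel, "link").text = "https://example.com/"
    ET.SubElement(channel, "description").text = "Latest posts"
    for item in items:
        entry = ET.SubElement(channel, "item")
        ET.SubElement(entry, "title").text = item["title"]
        ET.SubElement(entry, "link").text = item["link"]
    return ET.tostring(rss, encoding="unicode")


json_feed = to_json(posts)
rss_feed = to_rss(posts)
print(rss_feed)
```

Because both outputs come from one data source, a feed reader can subscribe to the RSS version while another site's script consumes the JSON version.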

Concepts

Web 2.0 can be described in three parts:

- Rich web application — defines the experience brought from desktop to browser, whether it is "rich" from a graphical point of view or a usability/interactivity or features point of view.[contradictory]

- Web-oriented architecture (WOA) — defines how Web 2.0 applications expose their functionality so that other applications can leverage and integrate the functionality providing a set of much richer applications. Examples are feeds, RSS feeds, web services, mashups.

- Social Web — defines how Web 2.0 websites tend to interact much more with the end user and make the end user an integral part of the website, either by adding his or her profile, adding comments on content, uploading new content, or adding user-generated content (e.g., personal digital photos).
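A mashup in the web-oriented-architecture sense can be sketched as a program that pulls structured data from two independent sources and merges it. In the Python below, both sources are hypothetical inline strings standing in for an RSS feed from one site and a JSON API response from another; a real mashup would fetch them over HTTP, and the join on the item link is an assumed design choice.

```python
import json
import xml.etree.ElementTree as ET

# Stand-in for an RSS feed published by a (hypothetical) photo site.
RSS_FEED = """<rss version="2.0"><channel><title>Photos</title>
<item><title>Harbor at dusk</title><link>https://photos.example/1</link></item>
<item><title>City park</title><link>https://photos.example/2</link></item>
</channel></rss>"""

# Stand-in for a JSON API response from a (hypothetical) mapping site.
GEO_API = '{"https://photos.example/1": [54.3, 10.1]}'


def mashup(rss_text: str, geo_json: str) -> list[dict]:
    """Join feed items with coordinates, keyed on the item link."""
    coords = json.loads(geo_json)
    merged = []
    for item in ET.fromstring(rss_text).iter("item"):
        link = item.findtext("link")
        merged.append({
            "title": item.findtext("title"),
            "link": link,
            "coords": coords.get(link),   # None when the map has no entry
        })
    return merged


for row in mashup(RSS_FEED, GEO_API):
    print(row)
```

Neither source site needs to know about the other. The mashup works only because both expose their data in machine-readable formats.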

As such, Web 2.0 draws together the capabilities of client- and server-side software, content syndication and the use of network protocols. Standards-oriented Web browsers may use plug-ins and software extensions to handle the content and user interactions. Web 2.0 sites provide users with information storage, creation, and dissemination capabilities that were not possible in the environment known as "Web 1.0".

Web 2.0 sites include the following features and techniques, referred to as the acronym SLATES by Andrew McAfee:[38]


Moreno's sociogram of a 2nd grade class

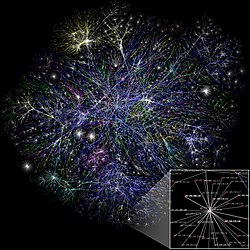

Moreno's sociogram of a 2nd grade class  Self-organization of a network, based on Nagler, Levina, & Timme (2011)[31]

Self-organization of a network, based on Nagler, Levina, & Timme (2011)[31]  Centrality

Centrality  …..

…..